Unsupervised Medical Image Translation with Adversarial Diffusion Models

Jul 17, 2022

Muzaffer Ozbey, Onat Dalmaz, Salman Dar, Hasan Atakan Bedel, Saban Ozturk, Alper Gungor, Tolga Cukur

Image credit: Unsplash

Abstract

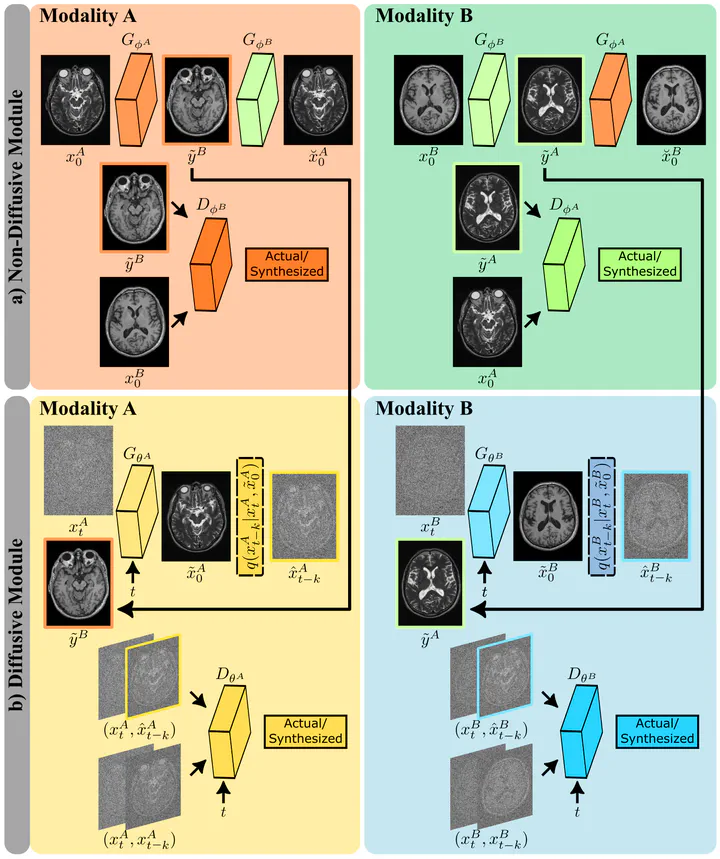

Imputation of missing images via source-to-target modality translation can facilitate downstream tasks in medical imaging. A pervasive approach for synthesizing target images involves one-shot mapping through generative adversarial networks (GANs). Yet, GAN models that implicitly characterize the image distribution can suffer from limited sample fidelity. Here, we propose a novel method based on adversarial diffusion modeling, SynDiff, for improved reliability in medical image synthesis. To capture a direct correlate of the image distribution, SynDiff leverages a conditional diffusion process to progressively map noise and source images onto the target image. For fast and accurate image sampling during inference, large diffusion steps are coupled with adversarial projections in the reverse diffusion direction. To enable training on unpaired datasets, a cycle-consistent architecture is devised with two coupled diffusion processes that synthesize the target given the source and the source given the target. Extensive assessments are reported on the utility of SynDiff against competing GAN and diffusion models in multi-contrast MRI and MRI-CT translation. Our demonstrations indicate that SynDiff offers quantitatively and qualitatively superior performance over competing baselines.
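The abstract's key sampling idea, replacing many small diffusion steps with a few large ones, can be illustrated on the forward (noising) side. The sketch below is an illustration under stated assumptions, not SynDiff's implementation. It assumes a standard linear variance schedule over 1000 fine steps (values chosen for illustration), merges every k = 250 fine steps into one large step, and draws noisy samples from the closed-form marginal q(x_t | x_0). It also includes a plain L1 cycle-consistency term of the kind commonly used in cycle-consistent translation. The helper names (`linear_betas`, `cumulative_alphas`, `coarse_schedule`, `q_sample`, `cycle_l1`) are hypothetical. The conditional generators, discriminators and adversarial reverse projections are deliberately omitted.

```python
import math
import random


def linear_betas(num_fine_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced per-step noise variances (illustrative values, an assumption)."""
    step = (beta_end - beta_start) / (num_fine_steps - 1)
    return [beta_start + i * step for i in range(num_fine_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] = prod_{s <= t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def coarse_schedule(alpha_bar, k):
    """Merge every k fine steps into one large step.

    Large step j ends at fine index e_j = j*k - 1 and has effective variance
    1 - alpha_bar[e_j] / alpha_bar[e_{j-1}] (alpha_bar before step 0 is 1).
    Fine steps beyond the last multiple of k are dropped.
    """
    ends = list(range(k - 1, len(alpha_bar), k))
    prev, big_betas = 1.0, []
    for e in ends:
        big_betas.append(1.0 - alpha_bar[e] / prev)
        prev = alpha_bar[e]
    return big_betas, [alpha_bar[e] for e in ends]


def q_sample(x0, abar_t, rng):
    """Draw x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) I), elementwise on a flat list."""
    s, n = math.sqrt(abar_t), math.sqrt(1.0 - abar_t)
    return [s * v + n * rng.gauss(0.0, 1.0) for v in x0]


def cycle_l1(x, x_cycled):
    """Mean absolute error between an image and its source->target->source reconstruction."""
    return sum(abs(a - b) for a, b in zip(x, x_cycled)) / len(x)


if __name__ == "__main__":
    fine = cumulative_alphas(linear_betas(1000))
    big_betas, big_abar = coarse_schedule(fine, k=250)
    print("large steps:", len(big_betas))
    print("per-step variances:", [round(b, 3) for b in big_betas])
    print("noisy sample:", q_sample([0.5, -0.5], big_abar[0], random.Random(0)))
```

With these assumed settings the 1000 fine steps collapse to four large steps. Each large-step variance is far larger than any fine-step variance. In this regime a simple Gaussian reverse step is commonly argued to fit poorly, and according to the abstract this is where SynDiff substitutes adversarial projections.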

Type

Publication

Under review, IEEE Transactions on Medical Imaging