One Model to Unite Them All: Personalized Federated Learning of Multi-Contrast MRI Synthesis

Jul 13, 2022

Onat Dalmaz

Usama Mirza

Gökberk Elmas

Muzaffer Özbey

Salman UH Dar

Emir Ceyani

Salman Avestimehr

Tolga Cukur

Image credit: Unsplash

Abstract

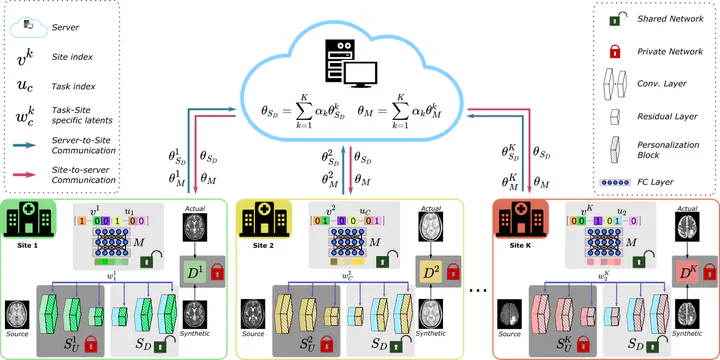

Multi-institutional collaborations are key for learning generalizable MRI synthesis models that translate source- onto target-contrast images. To facilitate collaboration, federated learning (FL) adopts decentralized training and mitigates privacy concerns by avoiding the sharing of imaging data. However, FL-trained synthesis models can be impaired by the inherent heterogeneity in the data distribution, with domain shifts evident when common or variable translation tasks are prescribed across sites. Here we introduce the first personalized FL method for MRI synthesis (pFLSynth) to improve reliability against domain shifts. pFLSynth is based on an adversarial model that produces latents specific to individual sites and source-target contrasts, and leverages novel personalization blocks that use these latents to adaptively tune the statistics and weighting of feature maps across the generator stages. To further promote site specificity, partial model aggregation is employed over the downstream layers of the generator, while the upstream layers are retained locally. As such, pFLSynth enables training of a unified synthesis model that can reliably generalize across multiple sites and translation tasks. Comprehensive experiments on multi-site datasets demonstrate the enhanced performance of pFLSynth against prior federated methods in multi-contrast MRI synthesis.
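To make the idea of partial model aggregation concrete, here is a minimal sketch in which parameters belonging to designated "shared" (downstream) layers are averaged across sites, while all other layers stay site-specific. This is an illustrative assumption, not pFLSynth's actual implementation: the parameter naming scheme, the hypothetical `decoder.` prefix used to mark shared layers, and the FedAvg-style weighting by local sample count are all choices made for this example only.

```python
import numpy as np

# Hypothetical prefix marking the downstream layers that are aggregated
# globally; the real layer split in pFLSynth is not specified here.
SHARED_PREFIXES = ("decoder.",)


def is_shared(name, shared_prefixes=SHARED_PREFIXES):
    """Return True if a parameter belongs to the globally aggregated layers."""
    return name.startswith(shared_prefixes)


def partial_aggregate(site_states, site_sizes, shared_prefixes=SHARED_PREFIXES):
    """Average shared parameters across sites; keep the rest site-specific.

    site_states: list of dicts mapping parameter name -> np.ndarray
    site_sizes:  local training-set sizes, used as averaging weights (assumed)
    Returns a new list of per-site state dicts.
    """
    weights = np.asarray(site_sizes, dtype=float)
    weights = weights / weights.sum()

    # Weighted (FedAvg-style) average of every shared parameter.
    global_shared = {}
    for name in site_states[0]:
        if is_shared(name, shared_prefixes):
            stacked = np.stack([state[name] for state in site_states])
            global_shared[name] = np.tensordot(weights, stacked, axes=1)

    # Each site receives the shared average but retains its own local layers.
    updated = []
    for state in site_states:
        new_state = {}
        for name, value in state.items():
            source = global_shared[name] if name in global_shared else value
            new_state[name] = source.copy()
        updated.append(new_state)
    return updated


# Usage: two hypothetical sites with one local and one shared parameter.
sites = [
    {"encoder.w": np.array([1.0, 1.0]), "decoder.w": np.array([0.0, 2.0])},
    {"encoder.w": np.array([5.0, 5.0]), "decoder.w": np.array([4.0, 6.0])},
]
out = partial_aggregate(sites, site_sizes=[1, 3])
# Both sites now share decoder.w == [3.0, 5.0]; encoder.w is unchanged.
```

Keeping the upstream layers out of the average is what lets each site preserve its own feature extraction, while the shared downstream layers pool knowledge across institutions.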

Type

Publication

Under review, IEEE Transactions on Medical Imaging